BLOG

A Denial of Service (DoS) attack prevents your legitimate users from accessing your API. The attack could be physical, such as unplugging network cables, but Distributed DoS (DDoS) attacks are far more common. A DDoS attack generates a large volume of requests from many machines to overwhelm your servers, and it can cost around $50,000 in lost revenue from downtime and mitigation.

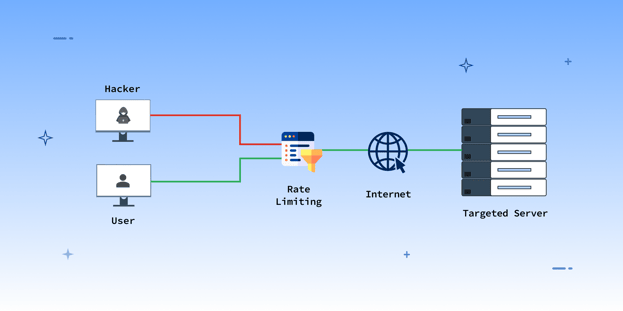

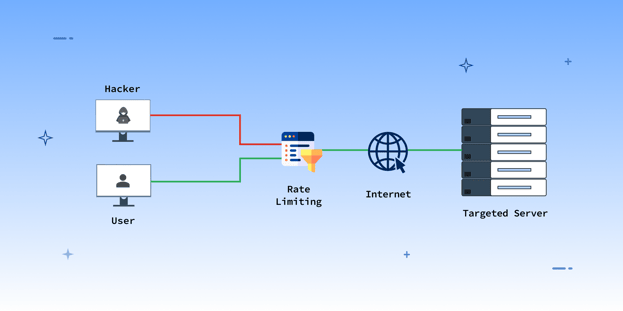

To prevent these attacks, you need a clear understanding of the defensive methods available. One such method is rate limiting.

In this blog, we’ll cover the basics of rate limiting: what it is, its types and algorithms, and how you can use it to build a suitable defense against DDoS attacks.

Tip: Apply rate limiting as early as possible, ideally at a reverse proxy or load balancer, before requests reach your API servers.

Rate limiting is a technique that restricts excessive traffic by specifying the maximum number of requests that a system, application, or API will process per unit of time, e.g., per second, minute, or hour. It also keeps fake and redundant requests from being processed, which reduces the risk of system overuse and keeps the service fairly available to all users.

If the specified rate limit is exceeded, the API stops processing the client's requests and may return an error code such as HTTP 429 (Too Many Requests). Rate limiting is key to system security because it prevents the abuse of server resources.

You can prevent Denial of Service attacks with rate limiting. The right technique depends on the purpose of your service, the endpoints you provide, and your customers’ needs and behavior. Here are three broad techniques that you can implement:

User rate limiting involves tracking users’ API keys or IP addresses and counting the requests each one makes in a particular timeframe. When a user exceeds the limit, the application denies the excess requests until the timeframe set by the rate limit resets.

Geographic rate limiting sets limits based on location, i.e., different rate limits for different regions during a specific timeframe. For instance, developers might notice that users in a particular region are less active between 1 pm and 4 pm and set a lower rate limit for that region during those hours.
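As a rough illustration of location-based limits, the sketch below looks up a per-minute limit from a region and a local time. The region name, the default of 100, and the quiet-hours value of 40 are all made-up assumptions, not values from any real service.

```python
from datetime import time

# Hypothetical values for illustration only (requests per minute).
DEFAULT_LIMIT = 100
REGION_RULES = {
    # region -> list of (start, end, limit) overrides in the region's local time
    "region-a": [(time(13, 0), time(16, 0), 40)],  # quieter between 1 pm and 4 pm
}


def limit_for(region, local_time):
    """Return the requests-per-minute limit for a region at a given local time."""
    for start, end, limit in REGION_RULES.get(region, []):
        if start <= local_time < end:
            return limit
    return DEFAULT_LIMIT
```

A limiter would call `limit_for` on each request and enforce whatever number comes back, so the override table can change without touching the enforcement code.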

When a user sends requests to an API, they might be processed by any one of several servers. Server rate limiting lets developers set different rate limits for different servers, which helps share the load and keeps excessive requests from flooding any one server.

Tip: A rate limit that is set too low could affect your genuine users. A safe approach is to inspect your logs and establish a baseline rate of genuine requests and traffic.
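One possible way to turn logs into a baseline is sketched below. It assumes the logs have already been reduced to `(client_id, minute)` pairs, one per request, and it picks a high percentile of per-client, per-minute counts plus some headroom. The 99th percentile and the 1.5x headroom are arbitrary starting points, not recommendations from any standard.

```python
import math
from collections import Counter


def baseline_limit(log_entries, percentile=0.99, headroom=1.5):
    """Suggest a per-client, per-minute limit from (client_id, minute) pairs."""
    counts = Counter(log_entries)  # requests seen per (client, minute) pair
    if not counts:
        raise ValueError("no log entries to analyse")
    ordered = sorted(counts.values())
    # Nearest-rank percentile of the observed per-minute request counts.
    rank = max(1, math.ceil(percentile * len(ordered)))
    return math.ceil(ordered[rank - 1] * headroom)
```

The idea is that a limit sitting above what almost all genuine clients do in their busiest minutes is unlikely to block them.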

Rate limiting works by identifying the IP address from which API requests originate and tracking both the number of requests made in a particular timeframe and the time elapsed between requests. If an IP address makes more requests than the limit allows within the timeframe, the rate limiter throttles that address and does not process the excess requests until the next timeframe.

A user who makes too many requests receives an error message asking them to try again after some time.
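For HTTP APIs, that message is commonly a 429 (Too Many Requests) status with a `Retry-After` header. The helper below is a framework-neutral sketch: the function name and the `(status, headers, body)` return shape are assumptions for illustration, not any particular web framework's API.

```python
import math


def too_many_requests(retry_after_seconds):
    """Build a (status, headers, body) triple for a request that was rate limited."""
    # Round up so the client never retries too early; always wait at least 1 second.
    wait = max(1, math.ceil(retry_after_seconds))
    headers = {"Retry-After": str(wait), "Content-Type": "text/plain"}
    body = f"Too many requests. Try again in {wait} seconds."
    return 429, headers, body
```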

There are several rate-limiting algorithms that you can apply in different scenarios to safeguard your network from excessive traffic and keep your service available.

Here’s a brief overview:

The fixed window algorithm restricts the number of requests accepted during a fixed timeframe, starting from a specified time.

For instance, a server component might implement an algorithm that accepts up to 100 API requests per minute, starting from 8:00 am. The server will not accept more than 100 requests between 8:00 and 8:01. A new window begins at 8:01, allowing another 100 requests until 8:02, and so on.
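A fixed window counter can be sketched in a few lines. The class and method names here are hypothetical, and the current time is passed in as `now` (seconds) so that the behaviour is easy to follow and test.

```python
class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed window."""

    def __init__(self, limit=100, window_seconds=60):
        self.limit = limit
        self.window_seconds = window_seconds
        self.state = {}  # client -> (window index, requests counted in that window)

    def allow(self, client, now):
        window = int(now // self.window_seconds)
        start, count = self.state.get(client, (window, 0))
        if start != window:
            # A new window has begun, so the counter starts again from zero.
            start, count = window, 0
        if count >= self.limit:
            return False
        self.state[client] = (start, count + 1)
        return True
```

Because the counter resets at each boundary, a client can use up one window's allowance just before the boundary and the next window's allowance just after it.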

The leaky bucket algorithm uses no timeframes. Instead, it defines a fixed length for the request queue. Requests are served on a first-come, first-served basis, and each new request is placed at the end of the queue.

The server will keep accepting the requests until the queue reaches its specified length, after which the additional requests are dropped.
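The queue behaviour described above can be sketched with a bounded FIFO queue. The names are hypothetical, and how fast `process_next` gets called (the "leak" rate) is left to the caller in this simplified version.

```python
from collections import deque


class QueueLimiter:
    """Fixed-length FIFO request queue; arrivals are dropped when it is full."""

    def __init__(self, max_length):
        self.max_length = max_length
        self.queue = deque()

    def offer(self, request):
        """Try to enqueue a request. Returns False if it was dropped."""
        if len(self.queue) >= self.max_length:
            return False
        self.queue.append(request)  # new requests join the end of the queue
        return True

    def process_next(self):
        """Take the oldest queued request, or None if the queue is empty."""
        return self.queue.popleft() if self.queue else None
```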

The sliding window algorithm breaks the rate-limit window into many small windows and tracks requests across them.

There is no preset start time here. Instead, the timeframe starts only when a user makes a new request. This keeps a user from having more requests processed than the limit allows by straddling a window boundary.

E.g., with a fixed window and a rate limit of 100 requests/minute, a user can send 100 requests at 7:59:59 and another 100 at 8:00:01. Hence, a user can send 200 requests within 2 seconds. In contrast, if sliding window rate limiting is implemented, a user who sends 100 requests at 8:00:01 am will only be able to send another 100 after 8:01:01 am.
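One simple way to get this behaviour is a timestamp log per client, sketched below. Note that this is a log-based variant chosen for brevity, not the "many small windows" counter layout; the names are hypothetical and time is passed in as `now` in seconds.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client in any trailing window."""

    def __init__(self, limit=100, window_seconds=60):
        self.limit = limit
        self.window_seconds = window_seconds
        self.log = defaultdict(deque)  # client -> timestamps of accepted requests

    def allow(self, client, now):
        stamps = self.log[client]
        # Forget requests that have aged out of the trailing window.
        while stamps and stamps[0] <= now - self.window_seconds:
            stamps.popleft()
        if len(stamps) >= self.limit:
            return False
        stamps.append(now)
        return True
```

Since the window trails each request rather than snapping to the clock, a burst on either side of a minute boundary counts against the same limit.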

This algorithm works by allowing for equal gaps between the requests. This ensures that users don’t make all requests in a very short period and hence prevents sudden load on servers.

For instance, if the rate limit is 600 requests/hour, then users can’t make all 600 requests within a few seconds or minutes; rather, the requests have to be spaced equally, i.e., 3600 seconds / 600 requests = 6 seconds apart. Hence, any request made less than 6 seconds after the previous one will be rejected.

However, the algorithm allows for a specific burst, say 100. So, a user will be able to make 100 additional requests. These 100 requests can arrive within a single 6-second gap, all at once, or be distributed across multiple gaps.
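Spacing with a burst allowance can be sketched in the style of the generic cell rate algorithm (GCRA); treating the algorithm described here as GCRA-like is an assumption on our part. At 600 requests per hour, the spacing works out to 3600 / 600 = 6 seconds. The names are hypothetical and time is passed in as `now` in seconds.

```python
class SpacedLimiter:
    """Enforce even spacing between requests, with a bounded burst allowance."""

    def __init__(self, limit=600, period_seconds=3600, burst=100):
        self.interval = period_seconds / limit   # 3600 / 600 = 6 seconds
        self.tolerance = burst * self.interval   # how far ahead of schedule a client may run
        self.next_slot = {}                      # client -> earliest on-schedule time

    def allow(self, client, now):
        slot = max(self.next_slot.get(client, now), now)
        if slot - now > self.tolerance:
            return False  # too far ahead of the even schedule
        self.next_slot[client] = slot + self.interval
        return True
```

With `burst=0`, this reduces to strict spacing: a request is accepted only once 6 seconds have passed since the last accepted one.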

Rate limiting solutions can prevent Denial of Service attacks. They let you define a timeframe and an ingress data-rate limit beyond which connections are dropped, which prevents bandwidth exhaustion.

Use this guide to determine which technique suits your organization the best, decide the algorithm to be employed, safeguard your systems, and ensure fair availability to all users.

A Denial of Service attack is a cyberattack in which the attacker makes it impossible for legitimate users to access computer systems and networks by flooding the servers with a large volume of fake traffic.

Denial of Service attacks aim to render a system inaccessible to genuine users by sending rapid requests to the target server and exhausting its bandwidth.
